I’m currently comparing different Python visualisation libraries for a thesis, including different interfaces to Bokeh. One part of this is comparing the total CPU runtime each library needs to generate the same map product from the same data. The performance benchmarking is done with cProfile.

The basic setup is this: after the data has been prepared and transformed into a form that each library can handle directly (the prepGDFs() function), I wrap all figure and axes definitions, including the central call to bokeh.plotting.figure, in a function called renderFigure(). That function is in turn wrapped with a decorator that basically does this:

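```python
import cProfile
import functools

def profiled(func):
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        p = cProfile.Profile()
        p.enable()                             # start cProfiling
        value = func(*args, **kwargs)          # execute renderFigure()
        p.disable()                            # stop cProfiling
        p.dump_stats(f"{func.__name__}.prof")  # write cProfile to file (filename illustrative)
        return value
    return wrapper
```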
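The file written by dump_stats() can be read back with the standard-library pstats module to see where the profiled time went. A minimal sketch, assuming a hypothetical render_stub() in place of renderFigure() and an illustrative output path in the temp directory:

```python
import cProfile
import os
import pstats
import tempfile

def render_stub():
    # Hypothetical stand-in for renderFigure(); just burns some CPU.
    return sum(i * i for i in range(200_000))

# Profile a single call and dump the results, as the decorator does.
p = cProfile.Profile()
p.enable()
render_stub()
p.disable()
outfile = os.path.join(tempfile.gettempdir(), "renderFigure.prof")
p.dump_stats(outfile)

# Load the dump and print the ten entries with the largest cumulative time.
stats = pstats.Stats(outfile)
stats.sort_stats(pstats.SortKey.CUMULATIVE).print_stats(10)
```

Sorting by cumulative time puts the outermost calls first, which makes it easy to see how much of renderFigure()'s total is spent inside individual library calls.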

Because different libraries employ different rendering strategies, I decided to try to force each of them to render the figure in VSCode rather than, say, some in VSCode and some in a browser window. To achieve this, a few adjustments were made for each of the libraries (see the table under point 5 of the ‘How are libraries being compared?’ section, which Discourse won’t let me link to as a new user…). For Bokeh, this meant adding

```python
import bokeh.io

bokeh.io.output.output_notebook()

# …

bokeh.io.show(plot)
```

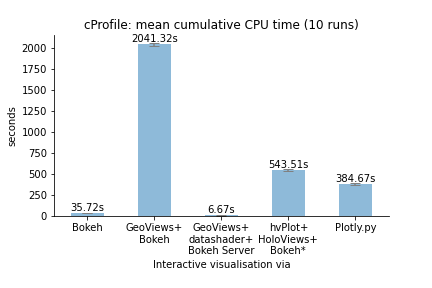

Now, the results are currently quite favorable to the vanilla Bokeh interface, as you can see below for the rendering of a large 144,000-polygon dataset.

The only puzzling thing is this: vanilla Bokeh’s mean CPU runtime as measured by cProfile differs substantially from the time it takes for the figure to actually appear in the VSCode interpreter. I manually timed (basically, stopwatched) the interval between prepGDFs() completing and the plot being fully rendered at around 125 seconds, versus the roughly 35 seconds cProfile records for renderFigure() alone (even 125 seconds would still put vanilla Bokeh well ahead of Plotly.py).
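Instead of a stopwatch, the interval can be timestamped in the script itself. The stdlib sketch below is only an illustration: prep_stub() and render_stub() are hypothetical stand-ins for prepGDFs() and renderFigure(), and time.sleep() simulates waiting that costs wall-clock time but almost no CPU. Note that cProfile's default timer is a wall-clock counter; the sketch passes time.process_time as the timer so the profile records CPU time of the Python process instead. process_time only counts CPU used by the Python process itself, and both measurements stop when the profiled call returns.

```python
import cProfile
import pstats
import time

def prep_stub():
    # Hypothetical stand-in for prepGDFs().
    return list(range(100_000))

def render_stub(data):
    # Hypothetical stand-in for renderFigure(): a little CPU work plus a
    # wait that takes wall-clock time but consumes almost no CPU time.
    total = sum(data)
    time.sleep(0.5)
    return total

data = prep_stub()
t_start = time.perf_counter()             # timestamp right after prep finishes

p = cProfile.Profile(time.process_time)   # profile using process CPU time
p.enable()
result = render_stub(data)
p.disable()

t_end = time.perf_counter()
wall_s = t_end - t_start                  # elapsed wall-clock interval
cpu_s = pstats.Stats(p).total_tt          # total CPU time in the profile

print(f"wall-clock interval: {wall_s:.2f} s")
print(f"profiled CPU time:   {cpu_s:.2f} s")
```

If the two numbers diverge sharply, the gap is time the profiled code spent waiting rather than computing.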

Would you have any insights on why this could be? What is happening between cProfile thinking the renderFigure() function is complete and the figure actually showing up in the interpreter? Is there any call I can make to Bokeh which would ensure the entirety of the process is captured by cProfile?

Many thanks!

PS: I also have other questions about why the different Bokeh interfaces (vanilla Bokeh, GeoViews, and hvPlot) produce such widely differing file sizes when saving the figure to disk (see the table below the screen captures at the bottom of the README, which I am also not allowed to link to here…). Any thoughts on that would also be greatly appreciated!