Hi guys,

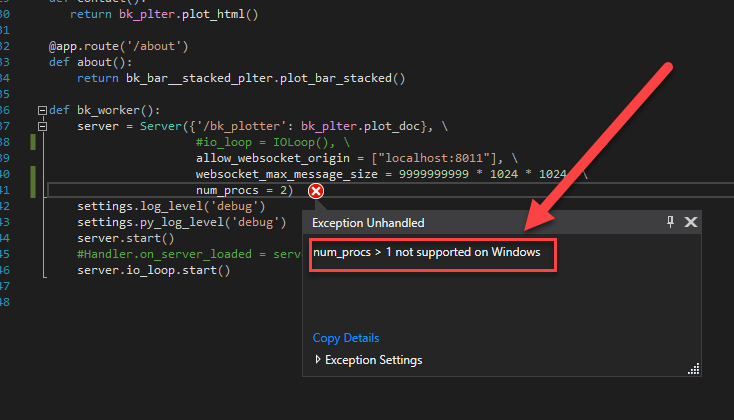

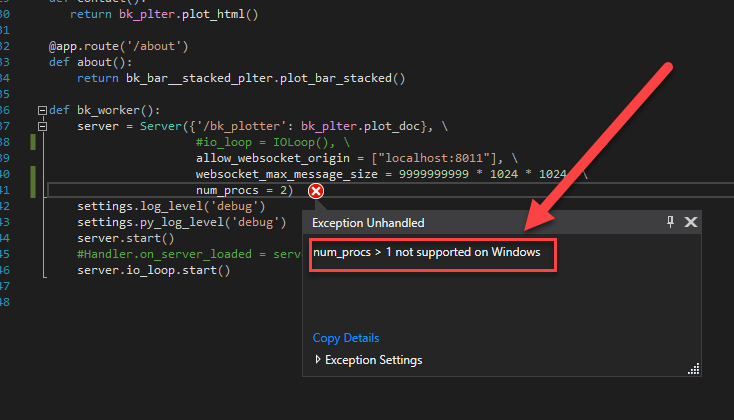

I am currently working on improving the performance of my project and tried to set num_procs. Unfortunately, this error appears. Could someone help me out? Thanks a lot.

Hi Peng,

There is nothing to do, I am afraid; the message is self-explanatory. On Windows, num_procs does not support any value other than 1. This is due to limitations in Tornado (which Bokeh is built on) that are outside our control. You can refer to the relevant GitHub issue and discussion here:

[Multiprocessing in Windows is not available (ERROR:module 'os' has no attribute 'fork') · Issue #7229 · bokeh/bokeh](https://github.com/bokeh/bokeh/issues/7229)
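The issue title hints at the root cause: multi-process mode relies on `os.fork`, which Python does not provide on Windows. A minimal sketch of a guard, assuming a hypothetical helper name `effective_num_procs` (it is not part of Bokeh or Tornado):

```python
import os


def effective_num_procs(requested: int) -> int:
    """Return the worker count that can actually be used on this platform.

    Hypothetical helper for illustration; not a Bokeh or Tornado API.
    """
    # os.fork only exists on Unix-like systems; without it, fall back to one process.
    if not hasattr(os, "fork"):
        return 1
    return requested


if __name__ == "__main__":
    print(effective_num_procs(4))
```

On Windows this always yields 1, which matches the restriction described above.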

If you need to "scale out" when running on Windows, then the only option I can suggest is to run multiple Bokeh server processes behind a load balancer; Nginx, for example, can be used to accomplish this. A full deployment behind Nginx, using the least-connections balancing strategy, can be studied here:

[GitHub - bokeh/demo.bokeh.org: Hosted Bokeh App Demos](https://github.com/bokeh/demo.bokeh.org)

There are a lot of extraneous things related to the use of Salt for automating that deployment, so I will say the most relevant bit of the Nginx config is this:

    upstream demos {
        least_conn;  # Use Least Connections strategy
        {% for i in range(0, NUM_SERVERS) %}
        server 127.0.0.1:51{{ '%.2d' | format(i|int) }};  # Bokeh Server {{ i }}
        {% endfor %}
    }

This consolidates a bunch of Bokeh servers, all started on different ports, into one "logical" endpoint.
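To make the template concrete, here is a minimal Python sketch of what it expands to, plus the matching commands for starting one Bokeh server per port. The function names, the `myapp` path, and the use of `python -m bokeh serve` are assumptions for illustration; the real deployment renders the template with Salt.

```python
import sys
from typing import List

BASE_PORT = 5100  # the template builds ports as "51" + two-digit index


def server_ports(num_servers: int) -> List[int]:
    """Ports 5100, 5101, ... matching the template (valid for up to 100 servers)."""
    return [BASE_PORT + i for i in range(num_servers)]


def render_upstream(num_servers: int, name: str = "demos") -> str:
    """Build the Nginx upstream block that the Jinja template would produce."""
    lines = [f"upstream {name} {{",
             "    least_conn;  # Use Least Connections strategy"]
    for i, port in enumerate(server_ports(num_servers)):
        lines.append(f"    server 127.0.0.1:{port};  # Bokeh Server {i}")
    lines.append("}")
    return "\n".join(lines)


def serve_commands(app_path: str, num_servers: int) -> List[List[str]]:
    """One 'bokeh serve' command line per port; each could be passed to subprocess.Popen."""
    return [
        [sys.executable, "-m", "bokeh", "serve", app_path, "--port", str(port)]
        for port in server_ports(num_servers)
    ]


if __name__ == "__main__":
    print(render_upstream(3))
    for cmd in serve_commands("myapp", 3):
        print(" ".join(cmd))
```

A real setup behind a proxy will probably need further options such as `--allow-websocket-origin`; those are left out to keep the sketch short.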

Thanks,

Bryan

On Jan 10, 2019, at 09:34, peng wang wrote:

> Hi guys,
>
> I am currently working on improving the performance of my project and tried to set num_procs. Unfortunately, this error appears. Could someone help me out? Thanks a lot.

--

You received this message because you are subscribed to the Google Groups "Bokeh Discussion - Public" group.

To unsubscribe from this group and stop receiving emails from it, send an email to bokeh+un...@continuum.io.

To post to this group, send email to bo...@continuum.io.

To view this discussion on the web visit https://groups.google.com/a/continuum.io/d/msgid/bokeh/db7b68f9-61ca-489e-a8db-d6eb5bd56586%40continuum.io.

For more options, visit https://groups.google.com/a/continuum.io/d/optout.

Hi there,

Thanks for your feedback, which is always valuable and useful. The reason I keep asking similar questions is my project's performance. I created a data analysis web application based on Bokeh + Flask, and it plots many charts (screenshots attached). You can imagine the code size. Unfortunately, as more and more charts are added, performance gets worse and worse. Every time I launch the web application, it takes around 30 seconds for the page to be ready, and if I move the date range slider, it takes a long time to render all the associated charts. I am tweaking it and trying to find a good way to solve this issue. Any comments are welcome.

By the way, I use threading almost everywhere, for example:

    import queue
    import threading
    from threading import Thread

    # uHelper, score_card and doc are defined elsewhere in the application.

    # 20190104 start
    uHelper.update_score_card_plot_queue = queue.Queue()
    uHelper.update_score_card_plot_event = threading.Event()

    # Run the chart update in a worker thread and put its return value on the queue.
    update_score_card_plot_thread = Thread(
        name='update_score_card_plot_thread',
        target=lambda q, *args: q.put(
            score_card.update_score_card_plot_charts(*args)),
        args=(uHelper.update_score_card_plot_queue,
              doc,
              uHelper.update_score_card_plot_event,
              uHelper.canada_rigs_plot_source,
              uHelper.canada_rigs_number_source,
              uHelper.canada_rigs_rank_source,
              uHelper.usa_rigs_plot_source,
              uHelper.usa_rigs_number_source,
              uHelper.usa_rigs_rank_source,
              None,
              None))
    update_score_card_plot_thread.start()
    uHelper.update_score_card_plot_event.set()
    # 20190104 end

Hi,

First of all, --num-procs would not help a single user session be any faster. --num-procs is only useful when you have multiple users, so that each user session might utilize a separate process. If this is what you are after, then another possible avenue that I forgot to mention is to use gunicorn, as in this example:

https://github.com/bokeh/bokeh/blob/master/examples/howto/server_embed/flask_gunicorn_embed.py

Assuming gunicorn works on Windows, which AFAIK it does, that may be simpler than setting up a proxy for load balancing.

However, if instead you mean you want to speed up a single session, then none of the above is relevant. Bokeh is not a magic bullet; it cannot make a computation that takes 30 seconds take less than 30 seconds. So there are only a few things to consider:

* Is there expensive work that can be done one-time/up-front? If so, you can use lifecycle hooks to do the work at server startup. See this example, which uses a lifecycle hook to do an expensive audio sample and FFT only once, in one place, for all sessions to read:

https://github.com/bokeh/bokeh/tree/master/examples/app/spectrogram

* Is there something about the structure of your app that is causing unnecessary duplication of work? Quite possibly, but it's not really possible to speculate without real investigation on running code. As a simple start, you can try things like putting print statements in expensive or long-running tasks, to see if they are executing more often than they should be.

* Is the app doing the minimum work, and the work just takes that long? Then there is nothing to do, at least on the Bokeh side of things. Bokeh doesn't make compute needs disappear. The only thing you can do is try to find or develop a more efficient algorithm or approach. Otherwise, if the compute can't be streamlined, what might help is some kind of busy/wait indicator for users. That is still an open issue for Bokeh itself, but the issue also has a possible workaround for now:

[Add busy/working spinner · Issue #3393 · bokeh/bokeh](https://github.com/bokeh/bokeh/issues/3393)
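The print-statement suggestion in the second point can be made a little more systematic with a decorator that counts and times calls. This is only a sketch: `traced`, `CALL_COUNTS`, and `load_rig_data` are hypothetical names, and the sleep stands in for real work.

```python
import functools
import time
from collections import Counter

# How many times each traced function has run in this process.
CALL_COUNTS = Counter()


def traced(func):
    """Print a line for every call, with a running call count and the elapsed time."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        CALL_COUNTS[func.__name__] += 1
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            elapsed = time.perf_counter() - start
            print(f"{func.__name__}: call #{CALL_COUNTS[func.__name__]} "
                  f"took {elapsed:.3f}s")
    return wrapper


@traced
def load_rig_data():
    """Hypothetical stand-in for an expensive query or computation."""
    time.sleep(0.01)
    return [1, 2, 3]


if __name__ == "__main__":
    load_rig_data()
    load_rig_data()
```

If a function that should run once per session shows a count that grows with every slider move, that is a likely candidate for duplicated work.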

Thanks,

Bryan

Thanks a lot for these suggestions; they are highly appreciated. Just one quick question about this one:

> Is there expensive work that can be done one-time/up-front? If so, you can use lifecycle hooks to do the work at server startup. See this example that uses a lifecycle hook to only do an expensive audio sample and FFT in one place, for all sessions to read:
>
> https://github.com/bokeh/bokeh/tree/master/examples/app/spectrogram

Is there any trick to calling "server_lifecycle.py"? Something like "bokeh server main.py server_lifecycle.py", or something else? I use Windows, not a Unix-like system.

Peng

Hi,

There are various possibilities, but by far the simplest is to have a "directory format" app with a main.py and server_lifecycle.py in the directory:

https://bokeh.pydata.org/en/latest/docs/user_guide/server.html#directory-format

https://bokeh.pydata.org/en/latest/docs/user_guide/server.html#lifecycle-hooks
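With a directory-format app there is no separate command for the hook file: as I read the linked documentation, the server picks up `server_lifecycle.py` from the app directory when that directory is served, for example with `bokeh serve myapp`. A minimal sketch follows, assuming a hypothetical app directory `myapp` and a hypothetical `load_expensive_data` function. How `main.py` then reads the cached data (for instance through a module that both files import) depends on the app layout and is not shown.

```python
# myapp/server_lifecycle.py  (hypothetical app directory; run with: bokeh serve myapp)
import time

# Module-level cache filled once at server startup (illustrative name).
DATA_CACHE = {}


def load_expensive_data():
    """Hypothetical stand-in for a slow query or computation."""
    time.sleep(0.01)
    return {"rig_counts": [10, 12, 9]}


def on_server_loaded(server_context):
    """Lifecycle hook: Bokeh calls this once when the server starts."""
    DATA_CACHE.update(load_expensive_data())


def on_session_created(session_context):
    """Lifecycle hook: called for each new session; the expensive work is not repeated."""
    pass
```

The same approach works on Windows, since it does not depend on multiple processes.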

Thanks,

Bryan

And then what do I do? Thanks.

On Thursday, January 10, 2019 at 2:05:32 PM UTC-7, Bryan Van de ven wrote:

Hi,

There are various possibilities, but by far the simplest is to have a “directory format” app with a main.py and server_lifecycle.py in the directory:

[https://bokeh.pydata.org/en/latest/docs/user_guide/server.html#directory-format](https://bokeh.pydata.org/en/latest/docs/user_guide/server.html#directory-format) [https://bokeh.pydata.org/en/latest/docs/user_guide/server.html#lifecycle-hooks](https://bokeh.pydata.org/en/latest/docs/user_guide/server.html#lifecycle-hooks)Thanks,

Bryan

On Jan 10, 2019, at 12:48, peng wang [email protected] wrote:

Thanks a lot for these suggestions. highly appreciated. just one quick question on this one.

Is there expensive work that can be done one-time/up-front? If so, you can use lifecycle hooks to do the work at server startup. See this example that uses a lifecycle hook to only do an expensive audio sample and FFT in one place, for all sessions to read:

[https://github.com/bokeh/bokeh/tree/master/examples/app/spectrogram](https://github.com/bokeh/bokeh/tree/master/examples/app/spectrogram)Is there any trick to call “server_lifecycle.py”? like “bokeh server main.py server_lifecycle.py” or somethingelse? I use windows not unix-like

Peng

On Thursday, January 10, 2019 at 12:23:48 PM UTC-7, Bryan Van de ven wrote:

Hi,

First of all, --num-procs would not help a single user session be any faster. --num-procs is only useful when you have multiple users, so that each user session might utilize a separate process. If this is what you are after, then I forgot another possible avenue that might be available is to use gunicorn as in this example:

[https://github.com/bokeh/bokeh/blob/master/examples/howto/server_embed/flask_gunicorn_embed.py](https://github.com/bokeh/bokeh/blob/master/examples/howto/server_embed/flask_gunicorn_embed.py)Assuming gunicorn works on Windows, which AFAIK it does, that may be simpler than setting up a proxy for load balancing.

However, if instead you mean you want to speed up a single session, then none of the above is relevant. Bokeh is not a magic bullet, it cannot make a computation that takes 30 seconds take less than 30 seconds. So there are only a few things to consider:

Is there expensive work that can be done one-time/up-front? If so, you can use lifecycle hooks to do the work at server startup. See this example that uses a lifecycle hook to only do an expensive audio sample and FFT in one place, for all sessions to read:

[https://github.com/bokeh/bokeh/tree/master/examples/app/spectrogram](https://github.com/bokeh/bokeh/tree/master/examples/app/spectrogram)

Is there something about the structure of your app that is causing unnecessary duplication of work? Quite possibly, but it’s not really possible to speculate without real investigation on running code. As a simply start, you can try things like putting print statements in expensive or long-running tasks, to see if they are executing more than they should be.

Is the app doing the minimum work, and the work just takes that long? Then there is nothing to do, at least on the Bokeh side of things. Bokeh doesn't make compute needs disappear. The only thing you can do is try to find or develop a more efficient algorithm or approach. Otherwise, if the compute can't be streamlined, what might be helpful is some kind of busy/wait indicator for users. That is still an open issue for Bokeh itself, but the issue also has a possible workaround for now:

[https://github.com/bokeh/bokeh/issues/3393](https://github.com/bokeh/bokeh/issues/3393)

Thanks,

Bryan

On Jan 10, 2019, at 11:08, peng wang [email protected] wrote:

Hi there,

Thanks for your feedback, which is always valuable and useful. The reason I keep asking similar questions is my project's performance. I created a data analysis web application based on Bokeh + Flask, and it plots many charts (below). You can imagine the code size. Unfortunately, as more and more charts are added, performance gets worse and worse. Every time I launch the web application, it takes around 30 seconds for the page to be ready, and if I move the date range slider, it takes a long time to render all the associated charts. I am tweaking it and trying to find a good way to solve this issue. Any comments are welcome.

<2019-01-10_11-54-48.png>

<2019-01-10_11-55-10.png>

<2019-01-10_11-55-27.png>

<2019-01-10_11-54-30.png>


Hi,

I am not sure what you are asking for. The docs I linked to explain what to do and how things need to be organized, and the example I linked earlier provides a concrete implementation you can study. That is why they were created. I could regurgitate all that here, but that is not a good use of time. Please try to refer to those resources first, and make some attempt based on that information. If there are still issues, then you will be able to ask specific and pointed questions.

Thanks,

Bryan

On Jan 10, 2019, at 13:41, peng wang <[email protected]> wrote:

and then? thanks

On Thursday, January 10, 2019 at 2:05:32 PM UTC-7, Bryan Van de ven wrote:

Hi,

There are various possibilities, but by far the simplest is to have a "directory format" app with a main.py and server_lifecycle.py in the directory:

https://bokeh.pydata.org/en/latest/docs/user_guide/server.html#directory-format

https://bokeh.pydata.org/en/latest/docs/user_guide/server.html#lifecycle-hooks

Thanks,

Bryan

> On Jan 10, 2019, at 12:48, peng wang <[email protected]> wrote:

> Thanks a lot for these suggestions, highly appreciated. Just one quick question on this one:

> * Is there expensive work that can be done one-time/up-front? If so, you can use lifecycle hooks to do the work at server startup. See this example that uses a lifecycle hook to only do an expensive audio sample and FFT in one place, for all sessions to read:

> https://github.com/bokeh/bokeh/tree/master/examples/app/spectrogram

> Is there any trick to call "server_lifecycle.py"? Like "bokeh server main.py server_lifecycle.py" or something else? I use Windows, not a Unix-like system.

> Peng




That is my fault.

This is my code for starting the server:

```python
# …

from DataVisualizationWebApp import bk_plotter as bk_plter

@app.route('/', methods=['GET'])
def bkapp_page():
    """Renders the home page."""
    url = 'http://localhost:5006/bk_plotter'
    script = server_document(url=url)
    return render_template("embed.html", script=script, template="Flask")

def bk_worker():
    # Serve plot_doc at /bk_plotter from a Bokeh server embedded in this process.
    server = Server({'/bk_plotter': bk_plter.plot_doc},
                    io_loop=IOLoop(),
                    allow_websocket_origin=["localhost:8011", "localhost:8022", "localhost:8033"],
                    websocket_max_message_size=9999999999 * 1024 * 1024)
    server.start()
    server.io_loop.start()
```

This is bk_plotter.py:

```python
def plot_doc(doc):
    uHelper.mTicker = uHelper.customize_ticker()
    uHelper.set_xAxis_ticker()
    uHelper.reset_xAxis_ticker()

    # Python callbacks for the selection widgets
    uHelper.rigs_combx.on_change('value', uHelper.rigs_combx_change)
    uHelper.jobs_combx.on_change('value', uHelper.jobs_combx_change)
    #uHelper.crewshift_combx.on_change('value', uHelper.crewshift_combx_change)

    # JavaScript callbacks that update the ticker when the x range changes or resets
    uHelper.main_plot.x_range.js_on_change('start', uHelper.ticker_cb)
    uHelper.main_plot.x_range.js_on_change('end', uHelper.ticker_cb)
    uHelper.main_plot.js_on_event(events.Reset, uHelper.ticker_cb_reset)

    uHelper.checkbox_group_1.on_change('active', uHelper.checkbox_callback_1)
    uHelper.checkbox_group_2.on_change('active', uHelper.checkbox_callback_2)
    uHelper.checkbox_group_3.on_change('active', uHelper.checkbox_callback_3)
    uHelper.main_plot.js_on_event(events.Tap, uHelper.hide_subplot_callback)
    # …
```

This is my server_lifecycle.py:

```python
def on_server_loaded(server_context):
    ''' If present, this function is called when the server first starts. '''
    print("on_server_loaded ")

def on_server_unloaded(server_context):
    ''' If present, this function is called when the server shuts down. '''
    print("$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$")
    print("on_server_unloaded")

def on_session_created(session_context):
    ''' If present, this function is called when a session is created. '''
    print("-----------------------------------------------")
    print("-----------------------------------------------")
    print("-----------------------------------------------")
    print("-----------------------------------------------")
    print("-----------------------------------------------")
    print("-----------------------------------------------")
    print("on_session_created")

def on_session_destroyed(session_context):
    ''' If present, this function is called when a session is closed. '''
    print("$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$")
    print("on_session_destroyed")
```

When I launch my project in the Visual Studio IDE, those four functions from server_lifecycle.py are never called. If you could let me know why, and how to get them called, it would be much appreciated.

This is the project directory:


Also, I read through all the links, but I never saw any clue about how to invoke those four functions. Does it mean that those four functions are called automatically if I just follow the “directory format”?

On Thursday, January 10, 2019 at 3:11:07 PM UTC-7, Bryan Van de ven wrote:

Hi,

I am not sure what you are asking for. The docs I linked to explain what to do, and how things need to be organized, and the example I linked earlier provides a concrete implementation you can study. That is why they were created. I could regurgitate all that here, but that is not a useful use of time. Please try to refer to those resources first, and make some attempt based on that information. If there are still issues, then you will be able to ask specific and pointed questions.

Thanks,

Bryan

On Jan 10, 2019, at 13:41, peng wang [email protected] wrote:

and then? thanks

On Thursday, January 10, 2019 at 2:05:32 PM UTC-7, Bryan Van de ven wrote:

Hi,

There are various possibilities, but by far the simplest is to have a “directory format” app with a main.py and server_lifecycle.py in the directory:

[https://bokeh.pydata.org/en/latest/docs/user_guide/server.html#directory-format](https://bokeh.pydata.org/en/latest/docs/user_guide/server.html#directory-format)

[https://bokeh.pydata.org/en/latest/docs/user_guide/server.html#lifecycle-hooks](https://bokeh.pydata.org/en/latest/docs/user_guide/server.html#lifecycle-hooks)

Thanks,

Bryan
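As a rough illustration of the directory format described in this message, a `server_lifecycle.py` could look like the sketch below. The four hook names are the ones documented in the lifecycle-hooks section linked above, and `bokeh serve` calls them itself when the file sits next to `main.py`. The `CACHE` dict and the `expensive_load` function are made up for this example.

```python
# myapp/server_lifecycle.py  (the myapp/ directory also contains main.py)
import time

# Hypothetical module-level cache, filled once when the server starts.
CACHE = {}


def expensive_load():
    """Stand-in for slow one-time work (hypothetical)."""
    time.sleep(0.01)
    return list(range(5))


def on_server_loaded(server_context):
    # Runs once at server startup, before any session exists.
    CACHE["data"] = expensive_load()


def on_server_unloaded(server_context):
    # Runs once when the server shuts down cleanly.
    CACHE.clear()


def on_session_created(session_context):
    # Runs each time a new session is created.
    pass


def on_session_destroyed(session_context):
    # Runs each time a session is closed.
    pass
```

Only the directory is passed on the command line, as in `bokeh serve myapp` (with `myapp` a placeholder name); the lifecycle file is not listed separately. How `main.py` reads the prepared data is left out here, and the spectrogram example linked in this thread is a working reference for that part.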

On Jan 10, 2019, at 12:48, peng wang [email protected] wrote:

Thanks a lot for these suggestions. highly appreciated. just one quick question on this one.

Is there expensive work that can be done one-time/up-front? If so, you can use lifecycle hooks to do the work at server startup. See this example that uses a lifecycle hook to only do an expensive audio sample and FFT in one place, for all sessions to read:

[https://github.com/bokeh/bokeh/tree/master/examples/app/spectrogram](https://github.com/bokeh/bokeh/tree/master/examples/app/spectrogram)

Is there any trick to call “server_lifecycle.py”? Like “bokeh server main.py server_lifecycle.py” or something else? I use Windows, not a Unix-like system.

Peng

On Thursday, January 10, 2019 at 12:23:48 PM UTC-7, Bryan Van de ven wrote:

Hi,

First of all, --num-procs would not help a single user session be any faster. --num-procs is only useful when you have multiple users, so that each user session might utilize a separate process. If this is what you are after, then another possible avenue that I forgot to mention is to use gunicorn, as in this example:

[https://github.com/bokeh/bokeh/blob/master/examples/howto/server_embed/flask_gunicorn_embed.py](https://github.com/bokeh/bokeh/blob/master/examples/howto/server_embed/flask_gunicorn_embed.py)

Assuming gunicorn works on Windows (worth verifying, since gunicorn describes itself as a WSGI server for UNIX), that may be simpler than setting up a proxy for load balancing.

However, if instead you mean you want to speed up a single session, then none of the above is relevant. Bokeh is not a magic bullet, it cannot make a computation that takes 30 seconds take less than 30 seconds. So there are only a few things to consider:

Is there expensive work that can be done one-time/up-front? If so, you can use lifecycle hooks to do the work at server startup. See this example that uses a lifecycle hook to only do an expensive audio sample and FFT in one place, for all sessions to read:

[https://github.com/bokeh/bokeh/tree/master/examples/app/spectrogram](https://github.com/bokeh/bokeh/tree/master/examples/app/spectrogram)

Is there something about the structure of your app that is causing unnecessary duplication of work? Quite possibly, but it’s not really possible to speculate without real investigation on running code. As a simple start, you can try things like putting print statements in expensive or long-running tasks, to see if they are executing more often than they should be.

Is the app doing the minimum work, and the work just takes that long? Then there is nothing to do, at least on the Bokeh side of things. Bokeh doesn’t make compute needs disappear. The only thing you can do is try to find or develop a more efficient algorithm or approach. Otherwise, if the compute can’t be streamlined, what might be helpful is some kind of busy/wait indicator for users. That is still an open issue for Bokeh itself, but the issue also has a possible workaround for now:

[https://github.com/bokeh/bokeh/issues/3393](https://github.com/bokeh/bokeh/issues/3393)

Thanks,

Bryan
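The print-statement check suggested in this message can be made a little more systematic with a small decorator that counts and times calls. This is only a sketch: `report_calls` and `load_data` are hypothetical names, and nothing in it is specific to Bokeh.

```python
import functools
import time


def report_calls(func):
    """Print how often func has run and how long each call took."""

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        wrapper.calls += 1
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            elapsed = time.perf_counter() - start
            print("%s: call #%d took %.3fs" % (func.__name__, wrapper.calls, elapsed))

    wrapper.calls = 0  # running count, readable as load_data.calls
    return wrapper


@report_calls
def load_data():
    """Hypothetical stand-in for an expensive step in the app."""
    time.sleep(0.05)
    return [1, 2, 3]
```

If a function that should run once per session shows up several times in the console after a single page load or slider move, that points at duplicated work rather than at the server itself.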

On Jan 10, 2019, at 11:08, peng wang [email protected] wrote:

Hi there,

Thanks for your feedback, which is always valuable and useful. The reason I keep asking similar questions is my project's performance. I created a data analysis web application based on Bokeh + Flask that plots many charts (below). You can imagine the code size. Unfortunately, as more and more charts are added, performance gets worse and worse. Every time I launch the web application, it takes around 30 seconds for the page to be ready, and if I move the date range slider, it takes a long time to render all the associated charts. I am tweaking it and trying to find a good way to solve this issue. Any comments are welcome.

<2019-01-10_11-54-48.png>

<2019-01-10_11-55-10.png>

<2019-01-10_11-55-27.png>

<2019-01-10_11-54-30.png>



server_lifecycle.py is a convenience that is only available to directory format Bokeh apps run using "bokeh serve". Since you are not using "bokeh serve" but are starting the Bokeh server yourself, programmatically (which was not evident or stated), you will have to do things the explicit way. Namely, make your own subclass of LifecycleHandler that implements whichever hooks you want to have implemented:

https://github.com/bokeh/bokeh/blob/master/bokeh/application/handlers/lifecycle.py

Then you will need to add this handler to your Application:

https://github.com/bokeh/bokeh/blob/master/bokeh/application/application.py#L142

Since you will need to manipulate the Application, you will have to be explicit about that as well, instead of just passing the function directly:

    from bokeh.application import Application
    from bokeh.application.handlers import FunctionHandler

    # YourLifecycleSubclass is a placeholder for your own LifecycleHandler subclass
    bokeh_app = Application(FunctionHandler(bk_plter.plot_doc))
    bokeh_app.add(YourLifecycleSubclass())
    server = Server({'/': bokeh_app}, ...)

Thanks,

Bryan

On Jan 10, 2019, at 14:27, peng wang <[email protected]> wrote:

that is my fault.

this is my code for server start:

...

    from DataVisualizationWebApp import bk_plotter as bk_plter

    @app.route('/', methods=['GET'])
    def bkapp_page():
        """Renders the home page."""
        url = 'http://localhost:5006/bk_plotter'
        script = server_document(url=url)
        return render_template("embed.html", script=script, template="Flask")

    def bk_worker():
        server = Server({'/bk_plotter': bk_plter.plot_doc},
                        io_loop=IOLoop(),
                        allow_websocket_origin=["localhost:8011", "localhost:8022", "localhost:8033"],
                        websocket_max_message_size=9999999999 * 1024 * 1024)
        server.start()
        server.io_loop.start()

this is bk_plotter.py

    def plot_doc(doc):
        uHelper.mTicker = uHelper.customize_ticker()
        uHelper.set_xAxis_ticker()
        uHelper.reset_xAxis_ticker()